Web Scraping with Rust

I previously wrote a Node.js program to archive blog content from a Blogger website as JSON data. I've never particularly enjoyed working with JavaScript. Even once the program worked as intended, I noticed it was using a lot of system resources. Containerizing and deploying it also involved a lot of overhead and a bloated .tar archive, because Node.js had to be installed as a dependency. For all of these reasons, I was eager to rewrite it in Rust.

My original JS implementation used the Puppeteer library to run a headless browser and "click" on links to older posts. I was initially unsure how to emulate this in Rust. It turns out there are good crates for driving a headless browser from Rust. In the end, though, I was able to drop the headless browser entirely and work only with the HTML, because the "Older Posts" button is an ordinary link whose href can be fetched directly.

Necessary Helper Methods

- As I worked on this project, I experimented with different numbers of threads to speed up downloading HTML content and parsing what I needed. I found that if I used too many threads, Blogger would throttle my requests and some posts wouldn't be reliably serialized. For this reason, I added logging to monitor successes and failures until I found a reliable number of threads to work with.

- I also created functions to abstract two steps: fetching a page as an HTML string, and finding the link to older posts in that page.

- I wasn't sure how I would sort posts. The blog's author skipped some numerical ids, and not every post has one. For the posts that do, the id is embedded in parentheses at the end of the title, so I wrote a function to extract it.

- Lastly, I wrote a function to write the Vec of blog posts to a file in JSON format.

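```rust
// Imports for the helpers module. `get` is assumed to come from reqwest's
// blocking client and `lock_exclusive()` from the fs2 crate's FileExt trait.
use crate::Post;
use chrono::NaiveDate;
use fs2::FileExt;
use regex::Regex;
use reqwest::blocking::get;
use scraper::{Html, Selector};
use std::collections::{HashMap, HashSet};
use std::env;
use std::fs::File;
use std::io::Write;
use std::path::Path;
use std::sync::{Arc, Mutex};

/// Creates the log file that all threads share for warnings and errors.
pub fn create_log_file() -> Result<Arc<Mutex<File>>, Box<dyn std::error::Error>> {
    // Return the error to the caller instead of exiting the process from a helper.
    let file = File::create("scrape_blogger.txt")?;
    println!(
        "scrape_blogger.txt log file created successfully at {}",
        env::current_dir()?.display()
    );
    Ok(Arc::new(Mutex::new(file)))
}

/// Downloads a page and returns its body as a String.
pub fn fetch_html(url: &str) -> Result<String, Box<dyn std::error::Error>> {
    let response = get(url)?.text()?;
    Ok(response)
}

/// Returns the href of Blogger's "Older Posts" link, if the page has one.
pub fn find_older_posts_link(document: &Html) -> Option<String> {
    let older_link_selector = Selector::parse("a.blog-pager-older-link").unwrap();
    document
        .select(&older_link_selector)
        .filter_map(|a| a.value().attr("href"))
        .map(String::from)
        .next()
}

/// Extracts the numeric id from a title that ends in "(123)".
pub fn extract_id_from_title(title: &str) -> Option<String> {
    let re = Regex::new(r"\((\d+)\)$").unwrap();
    re.captures(title)
        .and_then(|cap| cap.get(1).map(|m| m.as_str().to_string()))
}

/// Serializes the posts as pretty-printed JSON and writes them to `file_path`.
pub fn write_to_file(data: &[Post], file_path: &str) -> Result<(), Box<dyn std::error::Error>> {
    let path = Path::new(file_path);
    let mut file = File::create(path)?;
    file.lock_exclusive()?;
    let json_data = serde_json::to_string_pretty(data)?;
    // Write through the handle that holds the lock rather than reopening the path.
    file.write_all(json_data.as_bytes())?;
    file.unlock()?;
    println!("Data written to {}", file_path);
    Ok(())
}
```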
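To make the title convention concrete, here is a dependency-free sketch of what the `\((\d+)\)$` pattern in `extract_id_from_title` matches: one or more digits in parentheses at the very end of the title. The function name `id_from_title` is hypothetical. This version accepts only ASCII digits, whereas the regex crate's `\d` is Unicode-aware by default.

```rust
/// Dependency-free equivalent of the pattern `\((\d+)\)$` for ASCII digits:
/// returns the digits in a trailing "(...)" group, or None otherwise.
fn id_from_title(title: &str) -> Option<String> {
    // The title must end with ')'.
    let without_close = title.strip_suffix(')')?;
    // The group starts at the last '(' before that ')'.
    let open = without_close.rfind('(')?;
    let digits = &without_close[open + 1..];
    // Require at least one digit and nothing else, like `\d+`.
    if !digits.is_empty() && digits.bytes().all(|b| b.is_ascii_digit()) {
        Some(digits.to_string())
    } else {
        None
    }
}
```

Titles without a trailing group, or with anything after the closing parenthesis (even a space), yield `None`. Those are the posts that later show up as having no id.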

- I decided it would be most reliable to sort posts by date, and created functions to do so in ascending and descending order.

- Rust slices also have a `reverse()` method that can be called on a Vec, reversing its order in place. Calling it after sorting in one direction could have replaced one of the two functions shown here, and would probably be the more "rusty" approach.

```rust
/// Sorts posts newest first, using the date found in each post's date header.
pub fn sort_backup(backup: &mut Vec<Post>) -> Result<(), Box<dyn std::error::Error>> {
    // Pulls a "5 May 2024"-style date out of the header text.
    let re = Regex::new(r"(\d{1,2} \w+ \d{4})").unwrap();
    backup.sort_by(|a, b| {
        let a_date = a.date.as_ref().and_then(|d| {
            re.captures(d)
                .and_then(|cap| NaiveDate::parse_from_str(&cap[1], "%d %B %Y").ok())
        });
        let b_date = b.date.as_ref().and_then(|d| {
            re.captures(d)
                .and_then(|cap| NaiveDate::parse_from_str(&cap[1], "%d %B %Y").ok())
        });
        b_date.cmp(&a_date) // desc
    });
    Ok(())
}

/// Sorts borrowed posts oldest first; posts without a parseable date sort first.
pub fn sort_backup_asc(backup: &mut Vec<&Post>) -> Result<(), Box<dyn std::error::Error>> {
    let re = Regex::new(r"(\d{1,2} \w+ \d{4})").unwrap();
    backup.sort_by(|a, b| {
        let a_date = a.date.as_ref().and_then(|d| {
            re.captures(d)
                .and_then(|cap| NaiveDate::parse_from_str(&cap[1], "%d %B %Y").ok())
        });
        let b_date = b.date.as_ref().and_then(|d| {
            re.captures(d)
                .and_then(|cap| NaiveDate::parse_from_str(&cap[1], "%d %B %Y").ok())
        });
        a_date.cmp(&b_date) // asc
    });
    Ok(())
}
```
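As a sketch of the single-function alternative, the version below takes a direction flag. It uses `sort_by_cached_key`, which parses each date once instead of on every comparison, together with `std::cmp::Reverse` for descending order. Calling `reverse()` after an ascending sort would also work, but it flips the relative order of posts with equal dates, whereas sorting on a `Reverse` key keeps them in their original order. To stay dependency-free, it assumes dates shaped like "5 May 2024" and parses them by hand instead of with chrono. `DatedPost`, `parse_date`, and `sort_by_date` are hypothetical names.

```rust
use std::cmp::Reverse;

/// Hypothetical minimal post with an optional date string like "5 May 2024".
struct DatedPost {
    title: String,
    date: Option<String>,
}

const MONTHS: [&str; 12] = [
    "January", "February", "March", "April", "May", "June",
    "July", "August", "September", "October", "November", "December",
];

/// Parses "5 May 2024" into a (year, month, day) tuple, which sorts chronologically.
fn parse_date(s: &str) -> Option<(u32, u32, u32)> {
    let mut parts = s.split_whitespace();
    let day: u32 = parts.next()?.parse().ok()?;
    let month_name = parts.next()?;
    let month = MONTHS.iter().position(|m| *m == month_name)? as u32 + 1;
    let year: u32 = parts.next()?.parse().ok()?;
    Some((year, month, day))
}

/// Sorts by date in either direction. Undated posts come first when ascending
/// and last when descending, because `None` orders before `Some`.
fn sort_by_date(posts: &mut [DatedPost], descending: bool) {
    if descending {
        posts.sort_by_cached_key(|p| Reverse(p.date.as_deref().and_then(parse_date)));
    } else {
        posts.sort_by_cached_key(|p| p.date.as_deref().and_then(parse_date));
    }
}
```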

- Some blog posts shared ids, and I wanted to compile a list of these to share with the author.

- Rust's collections are excellent. The `HashMap` entry API lets you insert a default value and update it in a single expression, which makes counting occurrences very concise.

```rust
/// Logs every post id that appears more than once.
pub fn find_duplicates(backup: &[Post], logfile: Arc<Mutex<File>>) {
    print!("Checking for duplicate post ids...");
    // Count occurrences of each id with the HashMap entry API.
    let mut id_counts = HashMap::new();
    for post in backup {
        if let Some(ref id) = post.id {
            *id_counts.entry(id.clone()).or_insert(0) += 1;
        }
    }
    let duplicates: Vec<_> = id_counts.iter().filter(|&(_, &count)| count > 1).collect();
    if duplicates.is_empty() {
        println!("No duplicates found");
    } else {
        println!(
            "{} duplicates found, see scrape_blogger.txt for details",
            duplicates.len()
        );
        let mut log = logfile.lock().unwrap();
        for (id, count) in duplicates {
            writeln!(log, "[DUPLICATE] ID: {} was found {} times", id, count).ok();
        }
    }
}
```
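The entry-API counting pattern from `find_duplicates` can be shown on its own. In this std-only sketch, the hypothetical `duplicate_ids` takes plain `Option<&str>` ids instead of `Post`s. It returns the duplicates sorted, because `HashMap` iteration order is arbitrary. Ids are compared as strings here, so "10" sorts before "2".

```rust
use std::collections::HashMap;

/// Counts ids with the HashMap entry API and returns those seen more than once,
/// sorted by id. `None` entries (posts without an id) are skipped.
fn duplicate_ids(ids: &[Option<&str>]) -> Vec<(String, usize)> {
    let mut counts: HashMap<&str, usize> = HashMap::new();
    for id in ids.iter().copied().flatten() {
        // entry() yields the existing count, or inserts 0 first, then it is incremented.
        *counts.entry(id).or_insert(0) += 1;
    }
    let mut dups: Vec<(String, usize)> = counts
        .into_iter()
        .filter(|&(_, n)| n > 1)
        .map(|(id, n)| (id.to_string(), n))
        .collect();
    dups.sort();
    dups
}
```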

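- I also wrote a function to find gaps in the numeric id sequence, along with the posts that have no id at all, so each gap can be paired with a candidate post.

- Again, Rust's collections come with powerful built-in methods, such as `HashSet::difference`, that make this very succinct.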

```rust
/// Finds gaps in the numeric id sequence and logs which id-less posts might fill them.
pub fn find_missing_ids(backup: &[Post], logfile: Arc<Mutex<File>>) -> Result<(), Box<dyn std::error::Error>> {
    println!("Checking for posts with missing ids...");
    // Distinct numeric ids; duplicates collapse in the set.
    let actual_ids: HashSet<usize> = backup
        .iter()
        .filter_map(|post| post.id.as_ref()?.parse::<usize>().ok())
        .collect();
    // Bound the expected range by the largest id present. Bounding it by the
    // number of ids found instead shifts the range when ids are skipped or duplicated.
    let Some(&max_id) = actual_ids.iter().max() else {
        println!("No numeric ids were found");
        return Ok(());
    };
    let expected_ids: HashSet<usize> = (0..=max_id).collect();
    let mut missing_ids: Vec<usize> = expected_ids.difference(&actual_ids).cloned().collect();
    missing_ids.sort();

    // Posts without an id, oldest first, are the candidates for the gaps.
    let mut posts_without_ids: Vec<&Post> = backup.iter().filter(|post| post.id.is_none()).collect();
    sort_backup_asc(&mut posts_without_ids)?;

    if missing_ids.is_empty() {
        println!(
            "No missing ids were found. Consecutive ids found from 0 to {}",
            max_id
        );
    } else {
        println!(
            "{} ids were found to be missing, see scrape_blogger.txt for details",
            missing_ids.len()
        );
        let mut log = logfile.lock().unwrap();
        // Pair each gap with the next id-less post; this is only a suggestion.
        for (missing_id, post) in missing_ids.iter().zip(posts_without_ids.iter()) {
            writeln!(
                log,
                "[MISSING] ID: {} may be assigned to post with title {:?}",
                missing_id, post.title
            )
            .ok();
        }
    }
    Ok(())
}
```
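The gap check is easier to reason about in isolation. This std-only sketch, using a hypothetical `missing_ids` function, bounds the expected range by the largest id present rather than by how many ids were found. A count-based bound shifts whenever an id is duplicated or skipped, and even a gap-free sequence starting at 0 would report one id past the end as missing. Like the log message above, it assumes numbering starts at 0.

```rust
use std::collections::HashSet;

/// Returns the ids in 0..=max that never appear, where max is the largest
/// numeric id present. Non-numeric ids are ignored; duplicates collapse.
fn missing_ids(ids: &[&str]) -> Vec<usize> {
    let present: HashSet<usize> = ids.iter().filter_map(|s| s.parse().ok()).collect();
    let Some(&max) = present.iter().max() else {
        return Vec::new(); // no numeric ids, so no range to check
    };
    // Walking the range in order yields the gaps already sorted.
    (0..=max).filter(|n| !present.contains(n)).collect()
}
```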

Scraping Methods

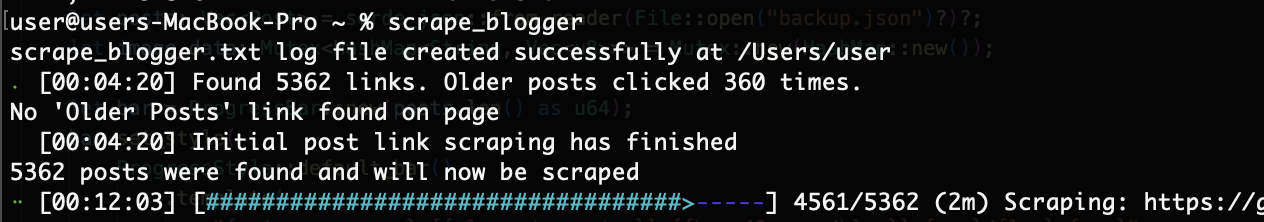

The file below contains the functions used for scraping blog content. At a high level, the program starts from the main Blogger landing page for a specific blog. That page is scraped so that links to posts (identified by matching a regex pattern) are stored in a HashSet, and all other links are discarded. The landing page also contains a link to older blog posts. The helper function shown earlier extracts this link from the landing page and from every subsequent page, until it reaches the final page, which has no such link.
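The pagination loop can be sketched without any networking by using an in-memory map in place of the listing pages. In this sketch, `Page`, `collect_links`, and the page names are hypothetical. It keeps a `HashSet` of visited pages, which catches a cycle of any length, not just a page whose "Older Posts" link points back to itself.

```rust
use std::collections::{HashMap, HashSet};

/// Hypothetical stand-in for one listing page: its post links and its
/// "Older Posts" link, if it has one.
struct Page {
    post_links: Vec<&'static str>,
    older: Option<&'static str>,
}

/// Follows "Older Posts" links from `start`, gathering post links into a
/// HashSet (so links repeated across pages are stored once). Stops at a page
/// with no older link, an unknown page, or a page that was already visited.
fn collect_links(pages: &HashMap<&str, Page>, start: &str) -> HashSet<String> {
    let mut links = HashSet::new();
    let mut visited = HashSet::new();
    let mut current = start;
    while visited.insert(current) {
        let Some(page) = pages.get(current) else { break };
        links.extend(page.post_links.iter().map(|s| s.to_string()));
        match page.older {
            Some(next) => current = next,
            None => break,
        }
    }
    links
}
```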

When this HashSet is finalized the program begins visiting each post link and serializing the HTML content into a Post struct:

```rust
#[allow(non_snake_case)] // allows the field to be named `URL`
#[derive(Serialize, Deserialize, Debug, Clone)]
struct Post {
    id: Option<String>,
    title: String,
    content: String,
    URL: String,
    date: Option<String>,
    images: HashSet<String>,
}
```

The code below is made considerably more verbose by two things: a progress indicator that keeps the CLI user informed, and the logic for running parallel threads. Initially I let Rayon handle the threading with its defaults. However, I found I needed a specific number of threads to avoid having my requests throttled by Blogger. I ultimately decided to build a dedicated Rayon thread pool whose size is passed as a command-line argument.

One optimization I would like to make here is to begin fetching individual posts as soon as their links are scraped from the listing pages, rather than waiting for the full set of links. I know how to do this with Go channels, and Rust's standard library offers something similar in `std::sync::mpsc`, but I haven't experimented with it yet.
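Here is a minimal sketch of that pipeline using `std::sync::mpsc`. It assumes link discovery and post fetching can run on separate threads. A producer thread sends each link as soon as it is available, and a fixed number of worker threads receive and process links concurrently. The fixed `links` list stands in for the "Older Posts" loop, and `process` stands in for fetching and parsing a post. `pipeline` is a hypothetical name, and it uses plain `std::thread` workers rather than Rayon. Because std's `Receiver` cannot be cloned, the workers share it behind an `Arc<Mutex<_>>`.

```rust
use std::sync::mpsc;
use std::sync::{Arc, Mutex};
use std::thread;

/// Sends each link to a pool of `workers` threads as soon as it is produced and
/// collects their results. Result order depends on scheduling, not input order.
fn pipeline<F>(links: Vec<String>, workers: usize, process: F) -> Vec<String>
where
    F: Fn(&str) -> String + Send + Sync + 'static,
{
    let (link_tx, link_rx) = mpsc::channel::<String>();
    let (result_tx, result_rx) = mpsc::channel::<String>();
    // std's Receiver has a single consumer, so the workers share it behind a Mutex.
    let link_rx = Arc::new(Mutex::new(link_rx));
    let process = Arc::new(process);

    // Producer: stands in for the loop that discovers post links page by page.
    let producer = thread::spawn(move || {
        for link in links {
            link_tx.send(link).expect("all workers exited early");
        }
        // link_tx is dropped here, which lets the workers' recv() calls end.
    });

    let handles: Vec<_> = (0..workers)
        .map(|_| {
            let link_rx = Arc::clone(&link_rx);
            let result_tx = result_tx.clone();
            let process = Arc::clone(&process);
            thread::spawn(move || loop {
                // The guard is dropped at the end of this statement, so the lock
                // is held only while waiting for a link, not while processing it.
                let next = link_rx.lock().unwrap().recv();
                match next {
                    Ok(link) => result_tx.send((*process)(link.as_str())).unwrap(),
                    Err(_) => break, // channel closed and drained
                }
            })
        })
        .collect();
    // Drop the original sender so result_rx ends once every worker is done.
    drop(result_tx);

    producer.join().unwrap();
    for handle in handles {
        handle.join().unwrap();
    }
    result_rx.into_iter().collect()
}
```

The `workers` argument plays the same role as the `--threads` flag: it caps how many posts are being processed at once, while new links keep arriving.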

```rust
use super::helpers;
use crate::Cli;
use crate::Post;
use indicatif::{MultiProgress, ProgressBar, ProgressStyle};
use rayon::prelude::*;
use rayon::ThreadPoolBuilder;
use regex::Regex;
use scraper::{Html, Selector};
use std::collections::HashSet;
use std::fs::File;
use std::io::Write;
use std::sync::{Arc, Mutex};
use std::thread;
use std::time::Duration;

const MAX_RETRIES: u32 = 4;
const RETRY_DELAY: Duration = Duration::from_secs(1);

/// Collects post links, then scrapes every post on a Rayon pool sized by `--threads`.
pub fn search_and_scrape(
    args: Cli,
    error_written: Arc<Mutex<bool>>,
    log_file: Arc<Mutex<File>>,
    base_url: &str,
) -> Result<Vec<Post>, Box<dyn std::error::Error>> {
    let post_links: HashSet<String> = if args.recent_only {
        scrape_base_page_post_links(base_url)?
    } else {
        scrape_all_post_links(base_url)?
    };

    let mp = MultiProgress::new();
    let pb = mp.add(ProgressBar::new(post_links.len() as u64));
    pb.set_style(
        ProgressStyle::default_bar()
            .template("{spinner:.green} [{elapsed_precise}] [{bar:40.cyan/blue}] {pos}/{len} ({eta}) {msg}")?
            .progress_chars("#>-"),
    );

    let backup = Arc::new(Mutex::new(Vec::new()));
    let progress = Arc::new(pb);

    println!(
        "{} posts were found and will now be scraped",
        post_links.len()
    );

    // A dedicated pool, rather than Rayon's global one, so the thread count
    // can be kept low enough to avoid Blogger's throttling.
    let pool = ThreadPoolBuilder::new().num_threads(args.threads).build()?;

    pool.install(|| {
        post_links.par_iter().for_each(|link| {
            progress.set_message(format!("Scraping: {}", link));
            match fetch_and_process_with_retries(link, log_file.clone()) {
                Ok(post) => {
                    let mut backup = backup.lock().unwrap();
                    backup.push(post);
                }
                Err(e) => {
                    // Flag the failure for main() and record the details in the log.
                    let mut err_written = error_written.lock().unwrap();
                    *err_written = true;
                    let mut log = log_file.lock().unwrap();
                    writeln!(
                        log,
                        "[ERROR] Failed to scrape post: {} with error: {:?}",
                        link, e
                    )
                    .ok();
                }
            }
            progress.inc(1);
        });
    });

    progress.finish_with_message("All posts processed!");
    // Every worker has finished, so this is the only remaining reference.
    let backup = Arc::try_unwrap(backup).unwrap().into_inner()?;
    Ok(backup)
}

/// Returns the links in the main posts container that match this blog's post URL pattern.
pub fn extract_post_links(document: &Html) -> Result<HashSet<String>, Box<dyn std::error::Error>> {
    let div_selector = Selector::parse("div.blog-posts.hfeed").unwrap();
    let a_selector = Selector::parse("a").unwrap();
    let regex =
        Regex::new(r"^https://gnosticesotericstudyworkaids\.blogspot\.com/\d+/.*\.html$").unwrap();
    if let Some(div) = document.select(&div_selector).next() {
        let hrefs = div
            .select(&a_selector)
            .filter_map(|a| a.value().attr("href"))
            .filter(|href| regex.is_match(href))
            .map(String::from)
            .collect::<HashSet<_>>();
        return Ok(hrefs);
    }
    Ok(HashSet::new())
}

/// Collects post links from the landing page only (the `--recent-only` path).
pub fn scrape_base_page_post_links(
    base_url: &str,
) -> Result<HashSet<String>, Box<dyn std::error::Error>> {
    let html = helpers::fetch_html(base_url)?;
    let document = Html::parse_document(&html);
    extract_post_links(&document)
}

/// Follows "Older Posts" links page by page, collecting post links until none remain.
pub fn scrape_all_post_links(
    base_url: &str,
) -> Result<HashSet<String>, Box<dyn std::error::Error>> {
    let mut all_links = HashSet::new();
    let mut current_url = base_url.to_string();
    let mut button_count = 0;

    let progress_bar = ProgressBar::new_spinner();
    progress_bar.set_style(
        ProgressStyle::default_spinner()
            .template("{spinner:.green} [{elapsed_precise}] {msg}")
            .unwrap(),
    );

    loop {
        let html = helpers::fetch_html(&current_url)?;
        let document = Html::parse_document(&html);
        let links = extract_post_links(&document)?;
        all_links.extend(links);

        progress_bar.set_message(format!(
            "Found {} links. Older posts clicked {} times.",
            all_links.len(),
            button_count
        ));
        progress_bar.tick();
        button_count += 1;

        if let Some(next_url) = helpers::find_older_posts_link(&document) {
            // Guard against a page whose "Older Posts" link points back to itself.
            if next_url == current_url {
                println!("Pagination loop detected: {}", next_url);
                break;
            }
            current_url = next_url;
        } else {
            println!("No 'Older Posts' link found on page");
            break;
        }
    }

    progress_bar.finish_with_message("Initial post link scraping has finished");
    Ok(all_links)
}

/// Tries a post up to MAX_RETRIES times, logging a warning and sleeping between attempts.
pub fn fetch_and_process_with_retries(
    url: &str,
    logfile: Arc<Mutex<File>>,
) -> Result<Post, Box<dyn std::error::Error>> {
    let mut attempts = 0;
    loop {
        attempts += 1;
        match fetch_and_process_post(url) {
            Ok(post) => return Ok(post),
            Err(e) if attempts >= MAX_RETRIES => return Err(e),
            Err(_) => {
                // Scope the guard so the log lock is released before sleeping;
                // otherwise every other thread would wait on it during the delay.
                {
                    let mut log = logfile.lock().unwrap();
                    writeln!(
                        log,
                        "[WARN] Failed to scrape post: {} on attempt {}/{}. Retrying after delay...",
                        url, attempts, MAX_RETRIES
                    )
                    .ok();
                }
                thread::sleep(RETRY_DELAY);
            }
        }
    }
}

/// Downloads one post and extracts its title, id, body text, date, and images.
fn fetch_and_process_post(url: &str) -> Result<Post, Box<dyn std::error::Error>> {
    let html = helpers::fetch_html(url)?;
    let document = Html::parse_document(&html);

    let title_selector = Selector::parse("title")?;
    let date_header_selector = Selector::parse(".date-header")?;
    let post_body_selector = Selector::parse(".post-body.entry-content")?;

    // Use the decoded text: inner_html() re-escapes '&' as "&amp;", which
    // would keep the blog-name prefix from matching.
    let title = document
        .select(&title_selector)
        .next()
        .ok_or("Title not found")?
        .text()
        .collect::<String>()
        .replace("Gnostic Esoteric Study & Work Aids: ", "");

    let id = helpers::extract_id_from_title(&title);

    // Join the text nodes of the first non-empty post body.
    let content = document
        .select(&post_body_selector)
        .map(|element| element.text().collect::<Vec<_>>().join(" "))
        .find(|text| !text.is_empty())
        .ok_or("Post body not found using any selector")?;

    let date = document
        .select(&date_header_selector)
        .next()
        .map(|n| n.text().collect::<Vec<_>>().join(" "));

    // Collect image URLs, skipping GIFs and the Blogger logo.
    let mut images = HashSet::new();
    if let Some(post_outer) = document.select(&Selector::parse(".post-outer")?).next() {
        let img_selector = Selector::parse("img")?;
        for img in post_outer.select(&img_selector) {
            if let Some(src) = img.value().attr("src") {
                if !src.contains(".gif") && !src.contains("blogger_logo_round") {
                    // Protocol-relative URLs ("//...") need a scheme to be usable on their own.
                    let safe_src = if src.starts_with("//") {
                        format!("http:{}", src)
                    } else {
                        src.to_string()
                    };
                    images.insert(safe_src);
                }
            }
        }
    }

    Ok(Post {
        id,
        title,
        content,
        URL: url.to_string(),
        date,
        images,
    })
}
```
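Tuning the thread count, as described above, comes down to watching how many posts succeed versus fail for a given pool size. The scraper records failures with a `Mutex<bool>` and log lines. As a lighter-weight alternative, here is a sketch of lock-free tallies using atomic counters that any worker can update through a shared reference. `ScrapeStats`, `record`, and `failure_rate` are hypothetical names.

```rust
use std::sync::atomic::{AtomicUsize, Ordering};

/// Hypothetical tallies of scrape outcomes that worker threads update through &self.
#[derive(Default)]
struct ScrapeStats {
    succeeded: AtomicUsize,
    failed: AtomicUsize,
}

impl ScrapeStats {
    /// Records one finished post. Relaxed ordering is enough because each counter
    /// is independent and is read only after the worker threads are joined.
    fn record(&self, ok: bool) {
        let counter = if ok { &self.succeeded } else { &self.failed };
        counter.fetch_add(1, Ordering::Relaxed);
    }

    /// Fraction of finished posts that failed, or 0.0 if none have finished.
    fn failure_rate(&self) -> f64 {
        let ok = self.succeeded.load(Ordering::Relaxed);
        let failed = self.failed.load(Ordering::Relaxed);
        if ok + failed == 0 {
            0.0
        } else {
            failed as f64 / (ok + failed) as f64
        }
    }
}
```

Shared as an `Arc<ScrapeStats>`, a failure rate reported at the end of a run would give a concrete signal for raising or lowering `--threads`.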

Main Method

The `main` function parses the command-line arguments I've decided to use, sets up a log file, and times the program. I've also created a separate code path that scrapes only the initial landing page and provides those posts to a "recents" tab in a mobile app.

```rust
use clap::Parser;
use serde::{Deserialize, Serialize};
use std::collections::HashSet;
use std::sync::{Arc, Mutex};
use std::time::Instant;

mod helpers;
mod scrapers;

#[derive(Parser, Debug, Clone)]
#[command(
    author = "John Harrington",
    version = "1.0",
    about = "Scrapes posts from a Blogger website"
)]
struct Cli {
    /// Sets the number of threads to use when scraping individual posts
    #[arg(short, long, default_value_t = 4)]
    threads: usize,

    /// Scrapes only recent posts from the blog homepage without clicking 'Older Posts'
    #[arg(short, long)]
    recent_only: bool,
}

// TODO it may make sense to implement Ord for Post
// but sometimes it makes sense to sort by date, others id
// for now specific functions will be used
#[allow(non_snake_case)]
#[derive(Serialize, Deserialize, Debug, Clone)]
struct Post {
    id: Option<String>,
    title: String,
    content: String,
    URL: String,
    date: Option<String>,
    images: HashSet<String>,
}

fn main() -> Result<(), Box<dyn std::error::Error>> {
    let args = Cli::parse();
    let error_written = Arc::new(Mutex::new(false));
    let log_file = helpers::create_log_file()?;
    let base_url = "https://gnosticesotericstudyworkaids.blogspot.com/";

    let search_timer = Instant::now();
    let mut backup = scrapers::search_and_scrape(
        args.clone(),
        error_written.clone(),
        log_file.clone(),
        base_url,
    )?;
    let search_duration = search_timer.elapsed();
    let minutes = search_duration.as_secs() / 60;
    let seconds = search_duration.as_secs() % 60;
    println!("Searching and scraping took {:02}:{:02}", minutes, seconds);

    helpers::sort_backup(&mut backup)?;

    let output_file = if args.recent_only {
        "recents.json"
    } else {
        "backup.json"
    };
    helpers::write_to_file(&backup, output_file)?;

    // search_and_scrape has returned and dropped its clone, so this is the only reference.
    let error_written = Arc::try_unwrap(error_written)
        .unwrap()
        .into_inner()
        .unwrap();

    if error_written {
        eprintln!("One or more errors occurred... See scrape_blogger.txt for more information. It may be necessary to re-run using fewer threads.");
    } else {
        println!("Be sure to check scrape_blogger.txt for any warnings. If many WARNs occurred, you may want to run with fewer threads.");
        println!("If no WARNs occurred, you may try increasing the thread pool using -t <num_threads> to speed things up.");
    }

    if !args.recent_only {
        helpers::find_duplicates(&backup, log_file.clone());
        helpers::find_missing_ids(&backup, log_file.clone())?;
    }

    Ok(())
}
```

Update: Reading existing JSON into memory to prevent redundant scraping

As I used the CLI, I quickly realized how much work it repeats on every run. I decided I could avoid this by reading the existing JSON data into memory and then skipping any collected post link that is already present in that JSON.

First I wrote a new function to read the JSON file into memory as a Vec of Posts:

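```rust
/// Reads a previously written backup JSON file into a Vec of Posts.
pub fn read_posts_from_file(path: &std::path::Path) -> Result<Vec<Post>, Box<dyn std::error::Error>> {
    let backup_file = File::open(path)?;
    let reader = std::io::BufReader::new(backup_file);
    let posts: Vec<Post> = serde_json::from_reader(reader)?;
    Ok(posts)
}
```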

Next I needed to modify my existing functions:

- I've wrapped an empty Vec of Posts in a Mutex inside an Arc because, down the road, I want to experiment with running this initial link-scraping step across multiple threads. I'm not sharing the Vec across threads yet. Even so, acquiring an uncontended mutex lock should cost far less than the HTTP GET requests, which are the real bottleneck.

- I use a match statement to determine whether the `read_posts_from_file()` function was able to read the specified JSON file. If it was, the `Ok` arm runs and checks whether the resulting Vec is non-empty. If it is, that Vec becomes the existing backup data.

- The `Err` arm further matches on the errors I consider most likely. Either the JSON file doesn't exist (because the program has never run or the file was deleted), there is a file permission error, or there is some other IO error. I've also added logging for deserialization errors and any other errors.

- Now, when `scrape_all_post_links()` is called, it receives either an empty Vec of Posts or a Vec containing previously archived posts.

```rust
pub fn search_and_scrape(
    args: Cli,
    error_written: Arc<Mutex<bool>>,
    log_file: Arc<Mutex<File>>,
    base_url: &str,
) -> Result<Vec<Post>, Box<dyn std::error::Error>> {
    let backup: Arc<Mutex<Vec<Post>>> = Arc::new(Mutex::new(Vec::new()));

    // BACKUP_FILE_PATH is defined elsewhere in the program.
    match helpers::read_posts_from_file(Path::new(BACKUP_FILE_PATH)) {
        Ok(file_backup) => {
            if !file_backup.is_empty() {
                println!(
                    "{} was found and will be used to load previously archived posts",
                    BACKUP_FILE_PATH
                );
                match backup.lock() {
                    Ok(mut backup_guard) => {
                        println!(
                            "Successfully loaded {} posts from backup",
                            file_backup.len()
                        );
                        *backup_guard = file_backup;
                    }
                    Err(e) => {
                        eprintln!("Failed to acquire lock on backup: {}", e);
                    }
                }
            } else {
                println!(
                    "{} was found but didn't contain any posts",
                    BACKUP_FILE_PATH
                );
            }
        }
        Err(e) => {
            // Distinguish the expected failure modes by downcasting the boxed error.
            if let Some(io_error) = e.downcast_ref::<std::io::Error>() {
                match io_error.kind() {
                    std::io::ErrorKind::NotFound => {
                        println!("No backup file found matching {}", BACKUP_FILE_PATH);
                    }
                    std::io::ErrorKind::PermissionDenied => {
                        eprintln!("Permission denied when trying to read {}", BACKUP_FILE_PATH);
                    }
                    _ => eprintln!("IO error reading backup file: {}", io_error),
                }
            } else if e.is::<serde_json::Error>() {
                eprintln!("Failed to parse JSON in backup file: {}", e);
            } else {
                eprintln!("Unexpected error reading backup file: {}", e);
            }
        }
    }

    let post_links: HashSet<String> = if args.recent_only {
        scrape_base_page_post_links(base_url)?
    } else {
        scrape_all_post_links(base_url, backup.clone())?
    };

    println!(
        "{} posts were found and will now be scraped",
        post_links.len()
    );

    // ...
}
```
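The `Err` arm above works by downcasting the boxed error to a concrete type. This std-only sketch isolates that technique. `describe_load_error` and `load` are hypothetical names, and `load` just reads the file as text. The JSON case is omitted so the example needs no serde_json.

```rust
use std::error::Error;
use std::io;

/// Turns a boxed loading error into a message by downcasting it to a concrete
/// type, the same technique the `Err` arm uses for io::Error.
fn describe_load_error(e: &(dyn Error + 'static), path: &str) -> String {
    if let Some(io_error) = e.downcast_ref::<io::Error>() {
        match io_error.kind() {
            io::ErrorKind::NotFound => format!("No backup file found matching {path}"),
            io::ErrorKind::PermissionDenied => {
                format!("Permission denied when trying to read {path}")
            }
            _ => format!("IO error reading backup file: {io_error}"),
        }
    } else {
        format!("Unexpected error reading backup file: {e}")
    }
}

/// Hypothetical loader: reads the file as text, boxing any io::Error via `?`.
fn load(path: &str) -> Result<String, Box<dyn Error>> {
    Ok(std::fs::read_to_string(path)?)
}
```

Because `?` boxes the original `io::Error` without wrapping it, `downcast_ref` can recover it and its `ErrorKind` later.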

- Now, when post links are found, any link that matches the URL of an existing Post is filtered out.

```rust
pub fn scrape_all_post_links(
    base_url: &str,
    backup: Arc<Mutex<Vec<Post>>>,
) -> Result<HashSet<String>, Box<dyn std::error::Error>> {
    // Snapshot the URLs of every previously archived post.
    let archived_links: HashSet<String> = match backup.lock() {
        Ok(backup_handle) => backup_handle.iter().map(|post| post.URL.clone()).collect(),
        Err(e) => {
            eprintln!(
                "Failed to acquire lock on backup while obtaining previously archived posts: {}",
                e
            );
            HashSet::new()
        }
    };

    let mut all_links = HashSet::new();
    let mut current_url = base_url.to_string();
    let mut visited_urls = HashSet::new();
    visited_urls.insert(current_url.clone());
    let mut button_count = 0;

    let progress_bar = ProgressBar::new_spinner();
    progress_bar.set_style(
        ProgressStyle::default_spinner()
            .template("{spinner:.green} [{elapsed_precise}] {msg}")
            .unwrap(),
    );

    loop {
        let html = helpers::fetch_html(&current_url)?;
        let document = Html::parse_document(&html);
        // Keep only links that are not already in the backup.
        let new_links: HashSet<String> = extract_post_links(&document)?
            .into_iter()
            .filter(|link| !archived_links.contains(link))
            .collect();
        let new_links_count = new_links.len();
        all_links.extend(new_links);

        // ...
    }

    // ...
}
```
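Stripped of the scraping and locking, the filtering step amounts to a set difference between the links found on the listing pages and the URLs already archived. `links_to_fetch` is a hypothetical helper for illustration, not part of the program.

```rust
use std::collections::HashSet;

/// Returns the discovered links that are not already archived, sorted because
/// HashSet iteration order is arbitrary.
fn links_to_fetch(archived_urls: &[&str], discovered: &[&str]) -> Vec<String> {
    let archived: HashSet<&str> = archived_urls.iter().copied().collect();
    // Collecting into a set also drops links seen on more than one listing page.
    let found: HashSet<&str> = discovered.iter().copied().collect();
    // Set difference: links found on listing pages but absent from the backup.
    let mut fresh: Vec<String> = found.difference(&archived).map(|s| s.to_string()).collect();
    fresh.sort();
    fresh
}
```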