A Hashing Service Written in Rust

I created a mobile application to stream audio and display an image associated with the content being played. A third-party API was used to obtain a URL that pointed to a remote image corresponding to the content. I used a Flutter stream to listen to the URL and display the associated image. Originally I added logic to the stream to download and display the most recently published image at a set time interval. However, this wasn't optimal: most audio streams last one hour, and can range in duration from twenty minutes to several hours, so the same image was downloaded over and over. A more efficient solution that didn't use so much network bandwidth was necessary.

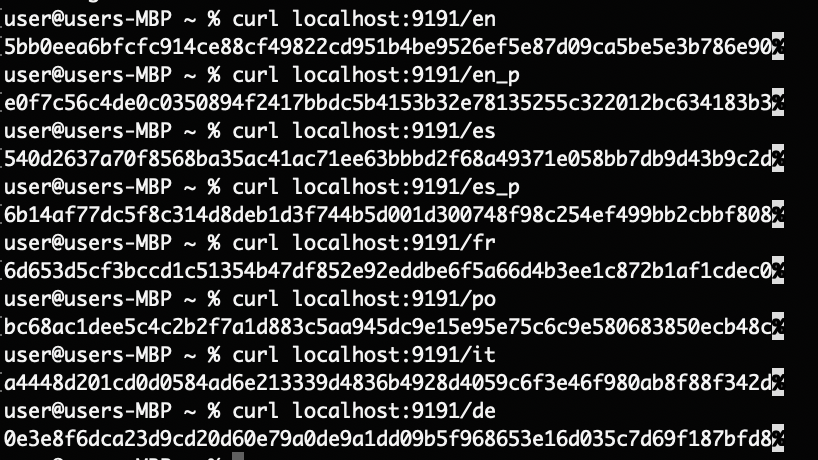

My solution was to implement a back-end service that downloads and hashes the images corresponding to each channel in the streaming application. The hashes are exposed as endpoints on a server, and instead of downloading an entire image file at a set interval, I modified the stream logic to request a SHA-256 hash of the image. So long as the hash doesn't change, there is no reason to download the remote image. This dramatically reduced the network and power consumption of my application. I hosted the back-end service on an AWS EC2 instance. Because EC2 bills for data leaving the instance and not for data entering it, and the service takes in whole images but sends out only short hashes, the cost is minimal.
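The client-side check described above can be sketched as follows. This is only an illustration written in Rust for consistency with the rest of the post (the real client is a Flutter application), and `ImageCache` and `needs_download` are hypothetical names, not part of the project.

```rust
/// Remembers the last hash seen for one channel (hypothetical helper).
#[derive(Default)]
struct ImageCache {
    last_hash: Option<String>,
}

impl ImageCache {
    /// Returns true when `latest_hash` differs from the stored hash,
    /// meaning the full image should be downloaded again.
    fn needs_download(&mut self, latest_hash: &str) -> bool {
        if self.last_hash.as_deref() == Some(latest_hash) {
            // Same hash as last time: the image has not changed.
            return false;
        }
        // New or first hash: remember it and ask for a download.
        self.last_hash = Some(latest_hash.to_string());
        true
    }
}
```

The first poll always triggers a download because nothing is cached yet. After that, only a changed hash does.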

Program imports and setup

- This package uses common modules from the standard library along with several third-party crates: Actix Web to run a well-established, secure web server, reqwest to download the remote images, sha2 to hash them, Serde to deserialize the TOML file that stores the API endpoints exposing the remote image URLs, Tokio to support non-blocking async operations, chrono to add date and time information to log output, and OpenSSL to support TLS.

- I've defined a constant to store the interval at which to download and hash the remote images.

- I've defined a `Config` struct that contains only a nested `Secrets` struct. This is used to load the remote image URLs without checking them into public source control.

- The `AppState` struct is used to hold the most recent image hashes. These are guarded by a mutex, ensuring the service doesn't respond with a partial hash while the variable is being written to. This struct also contains a `Notify` signal (from the Tokio crate) to signal when an update has occurred.

- I've written an implementation on the `Config` struct that is used to read the secret URLs from a TOML file into program memory.

```rust
use std::{
    sync::{Arc, Mutex},
    time::Duration,
    fs::read_to_string,
};
use actix_web::{get, web, App, HttpServer, HttpResponse};
use actix_web::middleware::Logger;
use reqwest;
use sha2::{Digest, Sha256};
use serde_derive::Deserialize;
use tokio::sync::Notify;
use chrono::prelude::*;
use openssl::ssl::{SslAcceptor, SslFiletype, SslMethod};

// How often (in seconds) the remote images are downloaded and re-hashed.
const REFRESH_HASH_IN_SECONDS: u64 = 60;

// Top-level layout of Config.toml: a single [secrets] table.
#[derive(Debug, Deserialize)]
struct Config {
    secrets: Secrets,
}

// One remote image URL per channel.
#[derive(Debug, Deserialize)]
struct Secrets {
    en_image: String,
    en_image_p: String,
    es_image: String,
    es_image_p: String,
    fr_image: String,
    po_image: String,
    it_image: String,
    de_image: String,
}

// Most recent hash per channel, each guarded by its own mutex.
struct AppState {
    en_image_hash: Mutex<String>,
    en_p_image_hash: Mutex<String>,
    es_image_hash: Mutex<String>,
    es_p_image_hash: Mutex<String>,
    fr_image_hash: Mutex<String>,
    po_image_hash: Mutex<String>,
    it_image_hash: Mutex<String>,
    de_image_hash: Mutex<String>,
    notify: Notify,
}

impl Config {
    // The String errors produced by `map_err` are converted into
    // `Box<dyn Error>` by the `?` operator.
    fn load_from_file(filename: &str) -> Result<Self, Box<dyn std::error::Error>> {
        let config_str = read_to_string(filename)
            .map_err(|err| format!("Unable to read config file: {}", err))?;
        let config: Config = toml::from_str(&config_str)
            .map_err(|err| format!("Unable to parse config file: {}", err))?;
        Ok(config)
    }
}
```
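The error handling in `load_from_file` relies on `map_err` turning each failure into a formatted `String`, which the `?` operator can then convert into a boxed error. A minimal standard-library sketch of the same pattern, using a hypothetical `parse_refresh_seconds` helper that is not part of the service:

```rust
use std::error::Error;

/// Parses a refresh interval from text (hypothetical helper for illustration).
fn parse_refresh_seconds(raw: &str) -> Result<u64, Box<dyn Error>> {
    let secs = raw
        .trim()
        .parse::<u64>()
        // Replace the low-level error with a readable message; `?` then
        // converts the String into Box<dyn Error>.
        .map_err(|err| format!("Unable to parse interval: {}", err))?;
    Ok(secs)
}
```

Calling `parse_refresh_seconds("60")` yields `Ok(60)`, while non-numeric input yields an error whose message starts with "Unable to parse interval".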

Downloading and hashing remote images

- I've used a declarative macro to contain reusable logic that will perform the work of downloading an image, hashing it, and storing the hash in the `AppState` struct.

- Macros were new to me when I started exploring Rust. I was familiar with template metaprogramming from a course devoted to code optimization in C++, but unlike metaprogramming in other languages, macros in Rust are less of a niche and more of a foundational feature of the language.

- For documentation on a very commonly used macro in Rust, see `std::println`. It is another declarative macro.

- The Rust book states: "At their core, declarative macros allow you to write something similar to a Rust match expression. As discussed in Chapter 6, match expressions are control structures that take an expression, compare the resulting value of the expression to patterns, and then run the code associated with the matching pattern. Macros also compare a value to patterns that are associated with particular code: in this situation, the value is the literal Rust source code passed to the macro; the patterns are compared with the structure of that source code; and the code associated with each pattern, when matched, replaces the code passed to the macro. This all happens during compilation."

- The `download_and_hash_image` macro I wrote expects three arguments. Each is preceded by a dollar sign, which indicates that the name will be replaced in the generated code by the value passed at the macro invocation.

- Here `$state_mu` represents a mutex from the `AppState` struct, `$image` represents a string containing a remote image URL, and `$state_notify` corresponds to the `tokio::sync::Notify` instance used to signal that a new hash is available.

- The macro definition contains an outer `match` expression that downloads the remote image to memory and an inner `match` expression that returns the image data on success. If either step fails, an error message is logged.

- Next the image is hashed and this hash is stored in the `AppState` struct.

- Logically this seems very similar to how a function would be used in other languages, and it is. There is no reason why a function couldn't have been used instead of a macro; I was just looking for an excuse to write a macro in Rust.

- The `download_and_hash_images` function reads the remote image URLs from the `Config` struct and passes these as arguments to the macro described above, along with the other required arguments.

- This function will call the `download_and_hash_image` macro for each image URL as often as the constant `REFRESH_HASH_IN_SECONDS` specifies, using the `tokio::time::sleep()` function.

- Finally, I've written a macro to generate endpoint functions at compile time.

```rust
// Downloads one image, hashes it, and stores the hash behind the given mutex.
// Must be invoked inside a loop: on failure it logs the error and `continue`s,
// which skips the rest of the current iteration at the call site.
macro_rules! download_and_hash_image {
    ($state_mu:expr, $image:expr, $state_notify:expr) => {
        let image_data = match reqwest::get($image).await {
            Ok(response) => match response.bytes().await {
                Ok(data) => data,
                Err(e) => {
                    let now: DateTime<Utc> = Utc::now();
                    eprintln!("{} : Error reading response bytes: {}", now, e);
                    continue;
                }
            },
            Err(e) => {
                let now: DateTime<Utc> = Utc::now();
                eprintln!("{} : Error fetching image: {}", now, e);
                continue;
            }
        };

        // Hex-encode the SHA-256 digest of the image bytes.
        let hash = format!("{:x}", Sha256::digest(&image_data));

        {
            let mut image_hash = $state_mu.lock().unwrap();
            *image_hash = hash.clone();
            $state_notify.notify_one();
        }
    };
}

async fn download_and_hash_images(state: Arc<AppState>, config: Config) {
    let en_image = config.secrets.en_image.clone();
    let en_p_image = config.secrets.en_image_p.clone();
    let es_image = config.secrets.es_image.clone();
    let es_p_image = config.secrets.es_image_p.clone();
    let fr_image = config.secrets.fr_image.clone();
    let po_image = config.secrets.po_image.clone();
    let it_image = config.secrets.it_image.clone();
    let de_image = config.secrets.de_image.clone();

    loop {
        download_and_hash_image!(state.en_image_hash, &en_image, state.notify);
        download_and_hash_image!(state.en_p_image_hash, &en_p_image, state.notify);
        download_and_hash_image!(state.es_image_hash, &es_image, state.notify);
        download_and_hash_image!(state.es_p_image_hash, &es_p_image, state.notify);
        download_and_hash_image!(state.fr_image_hash, &fr_image, state.notify);
        download_and_hash_image!(state.po_image_hash, &po_image, state.notify);
        download_and_hash_image!(state.it_image_hash, &it_image, state.notify);
        download_and_hash_image!(state.de_image_hash, &de_image, state.notify);

        tokio::time::sleep(Duration::from_secs(REFRESH_HASH_IN_SECONDS)).await;
    }
}

// Generates a GET handler named after an AppState field; the handler
// returns the current hash stored in that field.
macro_rules! create_hash_endpoint {
    ($state_field:ident, $route:expr) => {
        #[get($route)]
        async fn $state_field(state: web::Data<Arc<AppState>>) -> HttpResponse {
            let image_hash = state.$state_field.lock().unwrap();
            HttpResponse::Ok().body(image_hash.clone())
        }
    };
}

create_hash_endpoint!(en_image_hash, "/en");
create_hash_endpoint!(en_p_image_hash, "/en_p");
create_hash_endpoint!(es_image_hash, "/es");
create_hash_endpoint!(es_p_image_hash, "/es_p");
create_hash_endpoint!(fr_image_hash, "/fr");
create_hash_endpoint!(po_image_hash, "/po");
create_hash_endpoint!(it_image_hash, "/it");
create_hash_endpoint!(de_image_hash, "/de");
```
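One detail of the download macro worth illustrating is that its `continue` expands at the call site, so it acts on whichever loop surrounds the invocation. The sketch below shows the same pattern using only the standard library. The `parse_or_skip!` macro and `sum_valid` function are hypothetical names chosen for illustration and are not part of the service.

```rust
// Expands to a `match` that yields the parsed number or skips the rest
// of the current iteration of the loop surrounding the invocation.
macro_rules! parse_or_skip {
    ($text:expr) => {
        match $text.parse::<u32>() {
            Ok(n) => n,
            Err(_) => continue,
        }
    };
}

/// Sums every input that parses as a u32, ignoring the others.
fn sum_valid(inputs: &[&str]) -> u32 {
    let mut total = 0;
    for text in inputs {
        // On a parse failure the macro's `continue` jumps to the next input,
        // so the addition below is never reached for that item.
        let n = parse_or_skip!(text);
        total += n;
    }
    total
}
```

For the inputs `["1", "x", "3"]`, `sum_valid` adds 1 and 3 and skips `"x"`. By the same mechanism, a failed download in the service's `loop` would skip the rest of that pass, including the later images and the `sleep` call.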

- I am really starting to appreciate the value that macros provide: this one has saved a lot of boilerplate code. Instead of defining eight endpoint functions with nearly identical logic, I have written one macro that will perform the work of attributing and naming each actual endpoint function at compile time.

- The `$state_field` will be the endpoint function name in the generated code. It also names the `AppState` field that the endpoint reads.

- The `$route` will correspond to the actual endpoint on the server, shown as the attribute above the endpoint function.
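To show how an `ident` fragment becomes a function name, here is a minimal standard-library sketch. The `create_label_fn!` macro is hypothetical and much simpler than the endpoint macro: it generates plain functions that return a string instead of Actix Web handlers.

```rust
// Generates a function named `$fn_name` that reports its own name and route.
macro_rules! create_label_fn {
    ($fn_name:ident, $route:expr) => {
        fn $fn_name() -> String {
            // `stringify!` turns the identifier into a string literal.
            format!("{} -> {}", stringify!($fn_name), $route)
        }
    };
}

// Each invocation expands to one complete function definition.
create_label_fn!(en_image_hash, "/en");
create_label_fn!(fr_image_hash, "/fr");
```

After expansion, `en_image_hash()` and `fr_image_hash()` exist as ordinary functions, just as the eight endpoint functions do in the service.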

Putting it all together

- The `main()` function has an attribute identifying it as the entry point for an Actix Web application.

- An instance of the `AppState` struct is created inside of an `Arc`.

- An `Arc` is a thread-safe reference-counting pointer; the name stands for 'Atomically Reference Counted'. It is necessary because of how Rust handles ownership and borrowing. This is a huge topic, but it is the main reason why Rust is able to create efficient code guaranteeing memory safety without using a garbage collector, thereby eliminating overhead and increasing performance.

- The `Arc` is used to safely share the data contained inside `AppState` across multiple threads concurrently. The Actix Web server will handle multiple HTTP GET requests simultaneously, and each request may be processed on its own thread. The `Arc` provides the shared ownership, while the mutexes inside `AppState` prevent race conditions when a hash is read while it is being written.

- The `Arc` has an internal counter that is incremented each time the `Arc` is cloned; the `AppState` itself is never copied. When no more references to the shared `AppState` exist, the counter will equal 0 and Rust can drop the resource. This will only happen when the web server is stopped and the `main()` function exits.

- The first clone of the `Arc` is moved into a task spawned on the Tokio runtime that is devoted to downloading and hashing the remote images, using the logic described earlier.

- Next, the TLS connection is configured and the closure passed to `HttpServer::new` takes ownership of the original `Arc`, cloning it each time it builds an `App`.

- Logging middleware is added and the endpoint functions generated earlier by the second macro are registered with the web server.

```rust
#[actix_web::main]
async fn main() -> std::io::Result<()> {
    let app_state = Arc::new(AppState {
        en_image_hash: Mutex::new(String::new()),
        en_p_image_hash: Mutex::new(String::new()),
        es_image_hash: Mutex::new(String::new()),
        es_p_image_hash: Mutex::new(String::new()),
        fr_image_hash: Mutex::new(String::new()),
        po_image_hash: Mutex::new(String::new()),
        it_image_hash: Mutex::new(String::new()),
        de_image_hash: Mutex::new(String::new()),
        notify: Notify::new(),
    });

    // Background task that refreshes the hashes on a fixed interval.
    let app_state_clone = Arc::clone(&app_state);
    tokio::spawn(async move {
        let config = Config::load_from_file("Config.toml").unwrap_or_else(|e| {
            eprintln!("Error loading config: {}", e);
            std::process::exit(1);
        });
        download_and_hash_images(app_state_clone, config).await;
    });

    // TLS configuration.
    let mut builder = SslAcceptor::mozilla_intermediate(SslMethod::tls()).unwrap();
    builder
        .set_private_key_file("certs/key.pem", SslFiletype::PEM)
        .unwrap();
    builder
        .set_certificate_chain_file("certs/cert.pem")
        .unwrap();

    HttpServer::new(move || {
        App::new()
            .app_data(web::Data::new(app_state.clone()))
            .wrap(Logger::default())
            .service(en_image_hash)
            .service(en_p_image_hash)
            .service(es_image_hash)
            .service(es_p_image_hash)
            .service(fr_image_hash)
            .service(po_image_hash)
            .service(it_image_hash)
            .service(de_image_hash)
    })
    .bind_openssl("0.0.0.0:9191", builder)?
    .run()
    .await
}
```
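The interplay between `Arc` and `Mutex` described in this section can be sketched with the standard library alone. The example below uses an OS thread instead of a Tokio task, and `SharedHash` and `update_from_worker` are hypothetical names that stand in for `AppState` and the background downloader.

```rust
use std::sync::{Arc, Mutex};
use std::thread;

/// Simplified stand-in for AppState with a single guarded hash.
struct SharedHash {
    hash: Mutex<String>,
}

/// Writes `new_hash` from a worker thread and returns the reference
/// count observed while the worker's clone of the Arc was alive.
fn update_from_worker(shared: &Arc<SharedHash>, new_hash: &str) -> usize {
    // Cloning the Arc copies the pointer and bumps the counter;
    // the SharedHash value itself is not duplicated.
    let worker_handle = Arc::clone(shared);
    let count_while_shared = Arc::strong_count(shared);
    let value = new_hash.to_string();

    thread::spawn(move || {
        // The mutex ensures no reader sees a half-written hash.
        *worker_handle.hash.lock().unwrap() = value;
    })
    .join()
    .unwrap();

    count_while_shared
}
```

When the caller holds the only other reference, the function reports a count of 2 while the worker is alive, and the count falls back to 1 once the thread has finished and dropped its clone.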

I learned a lot from this project and would really like to continue using Rust for any future projects. I also wrote Actix Web middleware to rate-limit these endpoints, or any routes on an Actix Web server. Check it out here.